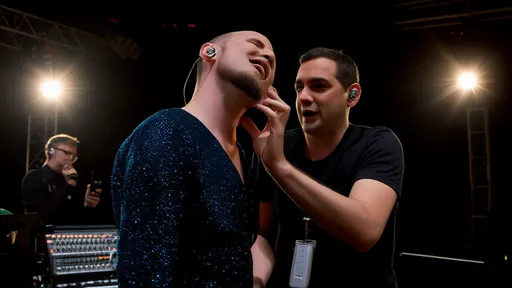

The broadcast industry is witnessing a paradigm shift in multi-camera production as new synchronization technologies emerge to tackle the perennial challenge of audio-video alignment. While lip-sync issues have plagued live productions for decades, recent advancements in timing protocols and network-based solutions are finally delivering frame-accurate synchronization across complex multi-camera setups.

Traditional synchronization methods relying on genlock and timecode have served the industry well for years, but they're increasingly showing their limitations in modern IP-based production environments. The migration to software-defined infrastructures and cloud-based production has created both challenges and opportunities for achieving perfect sync. Broadcast engineers are now implementing sophisticated timing architectures that maintain synchronization across distributed production sites and remote contributors.

At the heart of modern synchronization solutions lies the Precision Time Protocol (PTP, defined in IEEE 1588), applied to broadcast through the SMPTE ST 2059 standard that has become the cornerstone of broadcast synchronization. Unlike traditional methods that required separate cabling for video and audio sync, PTP distributes precise timing over the same IP network used for media transport. This network-based approach allows for microsecond-level accuracy across all devices in the production chain.
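The arithmetic behind a PTP timing exchange can be illustrated with a minimal sketch. It assumes the standard delay request-response mechanism with a symmetric network path; the class and function names are hypothetical and not taken from any particular PTP stack.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PtpExchange:
    """Four timestamps from one Sync / Delay_Req exchange, in nanoseconds."""
    t1: int  # grandmaster sends Sync (grandmaster clock)
    t2: int  # follower receives Sync (follower clock)
    t3: int  # follower sends Delay_Req (follower clock)
    t4: int  # grandmaster receives Delay_Req (grandmaster clock)


def mean_path_delay_ns(x: PtpExchange) -> float:
    # Average of the two one-way transit times; assumes a symmetric path.
    return ((x.t2 - x.t1) + (x.t4 - x.t3)) / 2


def offset_from_master_ns(x: PtpExchange) -> float:
    # Positive result: the follower clock is ahead of the grandmaster.
    return ((x.t2 - x.t1) - (x.t4 - x.t3)) / 2


# Illustrative numbers only: a 1000 ns path and a follower running 500 ns ahead.
exchange = PtpExchange(t1=1_000_000, t2=1_001_500, t3=1_050_000, t4=1_050_500)
print(mean_path_delay_ns(exchange))     # 1000.0
print(offset_from_master_ns(exchange))  # 500.0
```

A follower would subtract the computed offset from its local clock. Any asymmetry between the two directions of the path shows up directly as an error in that offset, which is one reason the network itself has to be designed for timing.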

The implementation of PTP requires careful network design and configuration. Broadcast facilities must ensure proper grandmaster clock placement, network switch configuration, and endpoint device compatibility. The latest generation of broadcast equipment now includes built-in PTP support, making integration more straightforward than ever before. However, achieving reliable synchronization still demands thorough system design and continuous monitoring.
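Grandmaster placement is easier to reason about with the election rule in view. The sketch below is a simplified illustration of the field-by-field comparison used by the IEEE 1588 best master clock algorithm, where lower values win. It leaves out the topology and steps-removed handling of the full algorithm, and the candidate names and attribute values are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class ClockCandidate:
    name: str                        # hypothetical label for readability
    priority1: int                   # operator-set, compared first
    clock_class: int                 # e.g. 6 = locked to a primary reference, 248 = default
    clock_accuracy: int
    offset_scaled_log_variance: int
    priority2: int                   # operator-set tie-breaker
    clock_identity: int              # unique ID, final tie-breaker

    def rank_key(self) -> Tuple[int, int, int, int, int, int]:
        # Fields are compared in this order; the lowest tuple wins.
        return (
            self.priority1,
            self.clock_class,
            self.clock_accuracy,
            self.offset_scaled_log_variance,
            self.priority2,
            self.clock_identity,
        )


def pick_grandmaster(candidates: List[ClockCandidate]) -> ClockCandidate:
    return min(candidates, key=ClockCandidate.rank_key)


candidates = [
    ClockCandidate("switch_fallback", 128, 248, 0xFE, 0xFFFF, 128, 0x0003),
    ClockCandidate("gps_b", 128, 6, 0x21, 0x4E5D, 129, 0x0002),
    ClockCandidate("gps_a", 128, 6, 0x21, 0x4E5D, 128, 0x0001),
]
print(pick_grandmaster(candidates).name)  # gps_a
```

In this example the two reference-locked clocks tie on quality, so `priority2` decides which one leads and which one stands by as backup.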

Audio synchronization presents particular challenges in multi-camera productions. While video frames can be aligned relatively easily, audio requires sample-accurate synchronization to avoid perceptible delays. Modern systems employ advanced buffer management and jitter reduction techniques to maintain audio integrity across multiple sources. The AES67 audio-over-IP standard has been instrumental in achieving this, providing a common framework for audio synchronization across different manufacturers' equipment.
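To make the sample-accuracy requirement concrete, the following sketch relates audio samples to video frames. The 48 kHz sample rate and the frame rates are illustrative assumptions, and the helper names are hypothetical.

```python
from fractions import Fraction


def samples_per_frame(sample_rate_hz: int, frame_rate: Fraction) -> Fraction:
    # Exact number of audio samples spanned by one video frame.
    return Fraction(sample_rate_hz) / frame_rate


def offset_in_samples(offset_ms: float, sample_rate_hz: int) -> int:
    # Convert an audio/video offset in milliseconds to whole samples.
    return round(offset_ms * sample_rate_hz / 1000)


pal = samples_per_frame(48_000, Fraction(25))
ntsc = samples_per_frame(48_000, Fraction(30_000, 1001))

print(pal)    # 1920
print(ntsc)   # 8008/5, i.e. 1601.6 samples per frame
print(offset_in_samples(40, 48_000))  # 1920, one 25 fps frame of delay
```

At 25 fps each frame holds a whole number of samples, but at 29.97 fps it does not: the audio only lines up with frame boundaries every five frames (8008 samples). Cases like this are why audio alignment is tracked in samples rather than in frames.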

Multi-camera productions often involve mixed formats and frame rates, adding another layer of complexity to synchronization. Productions might combine traditional broadcast cameras with consumer-grade devices, smartphones, or webcams, each with different timing characteristics. Sophisticated synchronization systems now include automatic format detection and compensation algorithms that can adjust timing dynamically based on each source's characteristics.
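One simple form of frame rate compensation is to choose, for every output frame, the most recent source frame that has already started. The sketch below shows that idea only. It is not a description of any vendor's algorithm, it assumes both streams start at time zero on a shared clock, and the function name is hypothetical.

```python
import math
from fractions import Fraction


def source_frame_for_output(out_index: int, out_rate: Fraction, src_rate: Fraction) -> int:
    """Index of the latest source frame that has started by the output frame's time."""
    out_time_s = Fraction(out_index) / out_rate   # exact presentation time in seconds
    return math.floor(out_time_s * src_rate)      # exact rational arithmetic, no float drift


# A 30 fps webcam feeding a 25 fps programme: source frame 5 is dropped.
print([source_frame_for_output(i, Fraction(25), Fraction(30)) for i in range(6)])
# [0, 1, 2, 3, 4, 6]

# A 25 fps camera feeding a 29.97 fps programme: source frame 0 is repeated.
print([source_frame_for_output(i, Fraction(30_000, 1001), Fraction(25)) for i in range(7)])
# [0, 0, 1, 2, 3, 4, 5]
```

Working in exact fractions avoids the slow accumulation of rounding error that floating-point frame rates such as 29.97 would introduce over a long programme.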

The rise of remote production has introduced new synchronization challenges. With cameras and production equipment distributed across multiple locations, maintaining precise timing requires robust wide-area network solutions. Broadcasters are implementing advanced timing distribution systems that can maintain synchronization across hundreds of kilometers, using both dedicated fiber networks and public internet connections with sophisticated timing recovery mechanisms.

Latency management remains critical in live productions where even milliseconds matter. Modern synchronization systems incorporate real-time latency measurement and compensation across all production elements. This includes not just cameras and audio sources but also graphics systems, replay devices, and streaming encoders. The entire signal path must be carefully timed to ensure perfect synchronization at the viewer's endpoint.
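The compensation step can be sketched as delaying every path to match the slowest one. The source names and latency figures below are hypothetical, and a real system would measure them continuously rather than hard-code them.

```python
from typing import Dict


def compensation_delays_ms(path_latency_ms: Dict[str, float]) -> Dict[str, float]:
    """Extra delay to add to each path so that all paths match the slowest one."""
    if not path_latency_ms:
        return {}
    target = max(path_latency_ms.values())
    return {name: target - latency for name, latency in path_latency_ms.items()}


measured = {
    "camera_1": 40.0,
    "camera_2": 55.0,
    "audio_mix": 12.0,
    "graphics": 80.0,
}
print(compensation_delays_ms(measured))
# {'camera_1': 40.0, 'camera_2': 25.0, 'audio_mix': 68.0, 'graphics': 0.0}
```

The slowest path sets the total latency of the production, so shortening that one path is the only way to reduce end-to-end delay without breaking alignment.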

Monitoring and diagnostics have become increasingly sophisticated in modern synchronization systems. Broadcast engineers now have access to real-time dashboards that display synchronization status across all sources, with automated alerting for any drift or loss of sync. These systems can predict potential issues before they become visible to viewers, allowing for proactive maintenance and troubleshooting.
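A very simple version of predictive drift alerting fits a straight line to recent offset measurements and estimates when the offset would cross an alarm limit. The sketch below assumes linear drift, and the sample data, units, and threshold are hypothetical.

```python
from typing import Optional, Sequence


def drift_rate(times_s: Sequence[float], offsets_us: Sequence[float]) -> float:
    """Least-squares slope of offset against time, in microseconds per second."""
    n = len(times_s)
    if n < 2 or n != len(offsets_us):
        raise ValueError("need at least two paired samples")
    mean_t = sum(times_s) / n
    mean_o = sum(offsets_us) / n
    denom = sum((t - mean_t) ** 2 for t in times_s)
    if denom == 0:
        raise ValueError("timestamps must not all be identical")
    numer = sum((t - mean_t) * (o - mean_o) for t, o in zip(times_s, offsets_us))
    return numer / denom


def seconds_until_limit(offset_us: float, rate_us_per_s: float, limit_us: float) -> Optional[float]:
    """Time until |offset| reaches the limit, or None if it is not drifting."""
    if abs(offset_us) >= limit_us:
        return 0.0
    if rate_us_per_s == 0:
        return None
    boundary = limit_us if rate_us_per_s > 0 else -limit_us
    return (boundary - offset_us) / rate_us_per_s


times = [0.0, 10.0, 20.0, 30.0]
offsets = [0.0, 0.5, 1.0, 1.5]          # a source drifting by 0.05 us every second
rate = drift_rate(times, offsets)
print(rate, seconds_until_limit(offsets[-1], rate, limit_us=10.0))
```

With these numbers the source would reach a 10 microsecond limit in roughly 170 seconds, which gives an operator time to act before the error becomes visible.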

The future of multi-camera synchronization looks toward even more integrated solutions. Emerging technologies like 5G timing synchronization and cloud-based timing services promise to make high-quality synchronization more accessible and cost-effective. As artificial intelligence and machine learning technologies mature, we can expect to see self-correcting synchronization systems that can automatically adjust timing parameters in real-time based on content analysis.

Standards development continues to play a crucial role in synchronization technology. Industry organizations like SMPTE, EBU, and IETF are working on new standards and recommendations to address emerging challenges in IP-based production. These efforts ensure interoperability between different manufacturers' equipment and provide clear guidelines for implementation.

Despite the technological advancements, human factors remain important in synchronization systems. Training for production staff and engineers must keep pace with technological changes. Understanding the principles of IP-based synchronization and knowing how to troubleshoot modern systems are becoming essential skills in broadcast operations.

The business impact of reliable synchronization cannot be overstated. In an increasingly competitive media landscape, viewers have little tolerance for technical imperfections. Broadcasters who invest in robust synchronization solutions not only ensure better viewer experiences but also future-proof their operations against evolving production requirements and emerging formats like 4K, HDR, and immersive audio.

As we look toward the next generation of broadcasting, synchronization will remain a fundamental requirement. The technologies may change from SDI to IP to cloud-based solutions, but the need for perfect audio-video alignment will only become more critical as production complexity increases and viewer expectations continue to rise.

By /Aug 22, 2025
